My First “Real” Computer

One of the “ice breaker” questions I ask in beginning computer science courses is “What was the first computer that really got you interested in programming?” That leads us into talking about the history of computing and how it has evolved over the past century.

The two machines that moved me from the “this is fun” to the “this can be a profession” mindset were computers we had in the lab I was part of in my first attempt at graduate school: the Tektronix 4406 and Texas Instruments Explorer LISP Workstation.

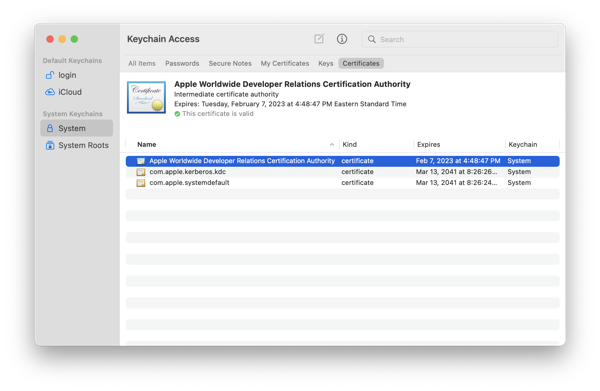

The Tektronix 4406 Smalltalk Workstation

Most people know Tektronix for their test equipment, with their oscilloscopes being what most people remember. They still make decent gear. But in the mid-to-late 1980s, Tek was a bit of a corporate dilettante with their hands in a number of things. One of those was a foray into the workstation market with machines based on the Motorola 68000 family of processors.

Tek was an early player in the Smalltalk ecosystem and by the late 1980s was producing a Smalltalk-focused 68000 workstation that ran a rather hacked-up version of Bell Labs Version 7 UNIX. My M.S. thesis advisor was an A.I. sort and had managed to get grant money for one of these machines. He had moved on to other things by this point and just told his graduate students to have fun with it. I had been following Smalltalk since reading a popular press article on the Dynabook project and was seriously geeked out about being able to play with it. This was my first big dive into the Smalltalk language, the Smalltalk programming environment, and the UNIX operating system.

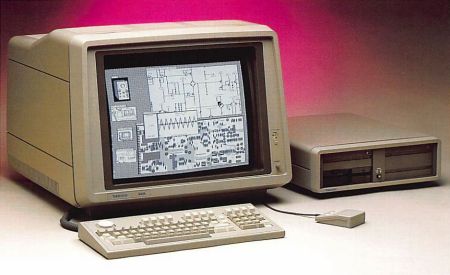

The Texas Instruments Explorer LISP Machine

The thing that had captured my advisor’s attention was a TI Explorer LX LISP Machine. LISP Machines were interesting beasts, as their processors and architecture were designed and optimized to run LISP. Most of the CAD tools for chip design from this period were built using LISP, and so TI licensed a design from Lisp Machines, Inc. (LMI) to create the TI Explorer product line. One of the innovations in this design was that it used NuBus, one of the early expansion bus architectures. So the LX included a co-processor card that was basically an independent 68000 UNIX workstation running AT&T UNIX System III.

My boss told me on my first day in the lab: “Make it work.” So what do I do on my first day? Inadvertently run “rm -rf *” in the root directory on the UNIX co-processor after spending about four hours loading the OS image from tape. Oops. What can I say? I was definitely a noob at the time.

Fun machine, tho’, as I got to experience the LISP Machine environment and run EMACS as God and Stallman originally intended. A large part of the really weird stuff we see today in GNU Emacs was a straight UX port from the LISP Machine. Things like the “CTRL-Windows-Alt” modifier keys (CTRL-SUPER-META on the LM). And the operating system was written entirely in LISP and you had the complete source code. And a lot of my sysadmin experience came from having to figure out how to make that co-processor work.

The Result

One of the things that got me hired at NCR was that they were looking for people with Smalltalk experience. And a lot of the people who worked on the Smalltalk team at Tek migrated to NCR when NCR tried to build a UX Research Center in Atlanta in the late ’80s and early ’90s. And getting exposed early to these programming environments, and to people who knew how to use them, made me a better programmer.

Selah.